Learn Before

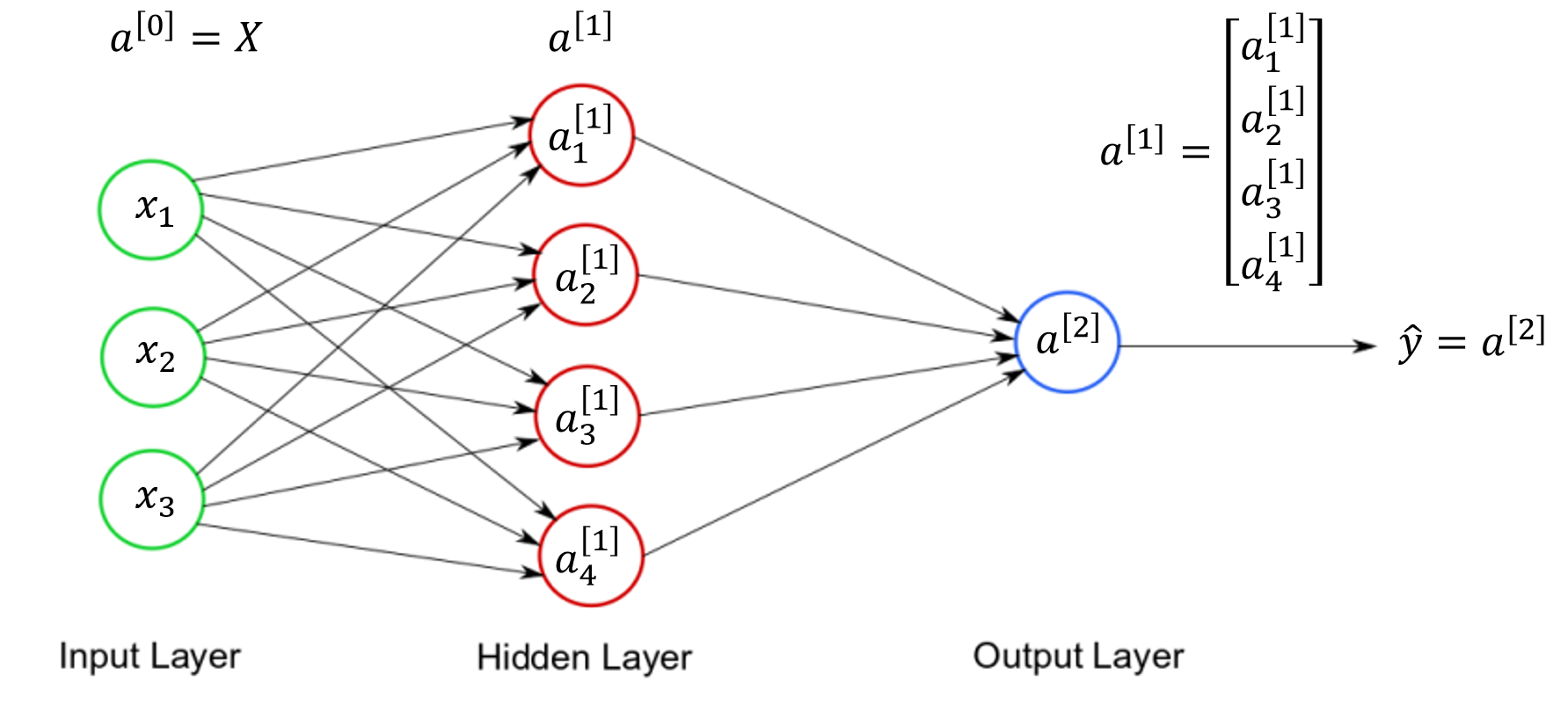

Connection between the Layers of Neural Network

Artificial Neural Networks Formulation

List of Common Hyperparameters in Deep Learning

Activation Functions in Neural Networks

In a neural network, numeric data points, called inputs, are fed into the neurons of the input layer. Each connection between neurons carries a weight. A neuron multiplies each incoming value by the corresponding weight, sums the products, and usually adds a bias term. The activation function is a mathematical “gate” between this weighted sum and the output that the neuron passes to the next layer. It can be as simple as a step function that switches the neuron's output on or off depending on a threshold. It can also be a smooth nonlinear transformation that maps input signals to output signals. This nonlinearity is what the network needs to function: without it, a stack of layers computes only a linear function of its inputs and is no more expressive than a single layer.

Activation functions are therefore the equations that determine each neuron's output and, through those outputs, the output of the whole network. A function is attached to each neuron and determines whether, and how strongly, that neuron is activated (“fired”). This lets the network emphasize the inputs that are relevant to its prediction. Some activation functions, such as sigmoid and tanh, also squash each neuron's output into a bounded range: between 0 and 1 for sigmoid, and between -1 and 1 for tanh. Others, such as ReLU, are unbounded for positive inputs. In a multilayer neural network, different layers can use different activation functions.
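The computation described above can be sketched in plain Python. This is a minimal illustration, not a reference implementation. It assumes a single neuron with a bias term and four common activations: step, sigmoid, tanh, and ReLU. The helper names (`weighted_sum`, `neuron`, `dense`, `compose_linear`) are hypothetical and do not come from any library. The second half of the sketch shows why the transformation should be nonlinear. Two stacked layers with no activation reduce to a single linear layer with weights `W2·W1` and bias `W2·b1 + b2`. Inserting ReLU between them changes the result.

```python
import math

# Hypothetical helper names, chosen only for this illustration.

def weighted_sum(inputs, weights, bias=0.0):
    """Pre-activation z = w_1*x_1 + ... + w_n*x_n + b for a single neuron."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias

def step(z, threshold=0.0):
    """On/off gate: outputs 1.0 once z reaches the threshold, else 0.0."""
    return 1.0 if z >= threshold else 0.0

def sigmoid(z):
    """Squashes z into (0, 1). Not overflow-guarded: math.exp fails for z below about -709."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Zeroes negative inputs; unbounded for positive ones."""
    return max(0.0, z)

# math.tanh squashes z into (-1, 1).
ACTIVATIONS = {"step": step, "sigmoid": sigmoid, "tanh": math.tanh, "relu": relu}

def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs, then the chosen activation function."""
    return ACTIVATIONS[activation](weighted_sum(inputs, weights, bias))

# --- Why the nonlinearity matters: two linear layers collapse into one ---

def dense(W, b, x, act=None):
    """Fully connected layer: W @ x + b, optionally followed by an elementwise activation."""
    z = [weighted_sum(x, row, bi) for row, bi in zip(W, b)]
    return [act(v) for v in z] if act else z

def compose_linear(W2, b2, W1, b1):
    """Single layer equal to dense(W2, b2, dense(W1, b1, x)): W = W2 W1, b = W2 b1 + b2."""
    W = [[sum(W2[i][k] * W1[k][j] for k in range(len(W1))) for j in range(len(W1[0]))]
         for i in range(len(W2))]
    b = [weighted_sum(b1, row, bi) for row, bi in zip(W2, b2)]
    return W, b

if __name__ == "__main__":
    x, w, b = [1.0, 2.0], [0.5, 1.0], -0.5   # z = 0.5*1 + 1.0*2 - 0.5 = 2.0
    for name in ACTIVATIONS:
        print(f"{name:>7}: {neuron(x, w, b, name):.4f}")

    W1, b1 = [[1.0, -2.0], [3.0, 0.0]], [1.0, -1.0]
    W2, b2 = [[2.0, 1.0]], [1.0]
    W, bc = compose_linear(W2, b2, W1, b1)
    x = [0.0, 1.0]
    print("two linear layers:", dense(W2, b2, dense(W1, b1, x)))
    print("collapsed layer:  ", dense(W, bc, x))                        # same result
    print("with ReLU between:", dense(W2, b2, dense(W1, b1, x, relu)))  # differs
```

The example weights were picked so that the first layer produces negative values for `x = [0.0, 1.0]`. This makes the effect of ReLU visible. For other inputs where every hidden value is already positive, ReLU would leave the result unchanged.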

Tags

Data Science

Foundations of Large Language Models Course

Computing Sciences

Related

Activation Functions in Neural Networks

Batch Normalization in Deep Learning

Matrix Degeneration

What does a neuron compute?

Depth and Width for Neural Networks

Dropout

Neural Network Learning Rate

Epochs in Machine Learning

Regularization Rate in Deep Learning

Deep Learning Optimizer Algorithms

Deep Learning Weight Initialization

Hyperparameters Tuning Methods in Deep Learning

Difference between Model Parameter and Model Hyperparameter

Learn After

Types of Activation Functions

Role of the Activation Functions in Neural Networks

How to Select an Activation Function

A neural network with multiple hidden layers is designed so that for every neuron, its output is simply the direct weighted sum of its inputs. No further mathematical transformation is applied to this sum before it is passed to the next layer. What is the most significant consequence of this design on the network's overall capability?

Diagnosing Neural Network Instability